Abstract

For any military operation, situational awareness is paramount. The military spends considerable time and resources gathering intelligence about an AO (area of operations) before entering it, while operating in it, and after leaving it. From the perspective of the foot soldier, the process of gathering this intelligence is largely analog. Soldiers analyze their environment with their own five senses. In some cases they use rudimentary electronics (bugging phones, intercepting radio transmissions, etc.), and in rarer cases they employ cutting-edge technology (such as directional audio amplifiers).

Information about an AO is important to the foot soldier because he is the one on the edge, making instantaneous decisions that have immediate consequences. It is also important to higher command, because it informs the macro-level decisions these leaders make in directing foot soldiers. AUGR enables real-time, passive, and automatic dissemination of visual information describing a particular AO.

Problem Statement

Situational awareness can always be improved. Currently, we rely on the individual soldier to detect and manually share valuable information about an AO. As a result, there are large blind spots in our understanding of an AO as we conduct operations in it. These blind spots can be eliminated with cheap, small, disposable technology that has only recently become developer- and consumer-friendly. Eliminating them could make a meaningful difference in the safety and effectiveness of soldiers on ground missions.

Proposal

With AUGR, we propose a device roughly the size of a smartphone that can be dropped or attached anywhere in an AO and left to passively and autonomously gather data, then relay it to those who can benefit from it most. Work began on a prototype in 2019, and we have since made significant progress in the following areas:

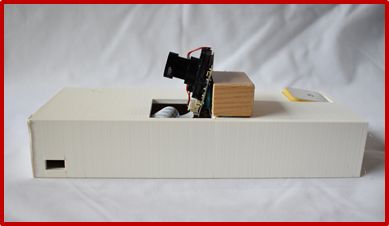

Scale: The AUGR prototype runs on a Raspberry Pi with a battery pack inside a 3D-printed casing. It fits in the palm of a hand and can be placed discreetly in and around rugged operational environments. Further work in this area involves optimizing the packaging, documenting the hardware, and increasing the processing power available on the edge.

Object Detection: The computer vision system currently running on the device identifies and tracks people and animals across a live video stream. These algorithms have been implemented with performance in mind to make the most of the Pi's built-in processing power, and the detection system runs at or just slightly behind real time. We also estimate the distance and direction of every track at every timestep, ready to be sent to an end user in a readable format.
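One common way to get a rough distance and direction from a single camera is the pinhole camera model. The sketch below is illustrative only and is not taken from the AUGR codebase: the function name, the assumed target height of 1.7 m, and the field-of-view parameter are all hypothetical, and it assumes square pixels and a bounding box that covers the full height of a standing person.

```python
import math


def estimate_range_and_bearing(box, image_width_px, horizontal_fov_deg,
                               assumed_height_m=1.7):
    """Estimate distance (m) and bearing (deg) of a detection.

    box is (x_min, y_min, x_max, y_max) in pixels. The bearing is measured
    from the camera's optical axis, positive to the right.
    All parameters are hypothetical; AUGR's actual method is not documented here.
    """
    x_min, y_min, x_max, y_max = box
    box_height_px = y_max - y_min
    if box_height_px <= 0:
        raise ValueError("bounding box must have positive height")

    # Focal length in pixels, derived from the horizontal field of view.
    focal_px = (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)

    # Similar triangles: real height / distance = pixel height / focal length.
    distance_m = assumed_height_m * focal_px / box_height_px

    # Horizontal offset of the box center from the image center gives the bearing.
    center_x = (x_min + x_max) / 2
    bearing_deg = math.degrees(math.atan2(center_x - image_width_px / 2, focal_px))
    return distance_m, bearing_deg
```

An estimate like this degrades when the target is crouching, partially occluded, or not a person, so in practice it would need per-class height assumptions or a calibrated depth source.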

Recognition: One branch of our detection system is a facial recognition system that attempts to find faces on detected people and then cross-reference them against an encrypted library of facial data stored on the Pi itself. This allows the Pi to identify unique individuals in real time without excessive communication between the end user and an HQ.
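The cross-referencing step can be pictured as a nearest-match lookup. The sketch below assumes that each face has already been reduced to a numeric embedding vector and that the on-device library has already been decrypted into memory; both are assumptions, as are the function names and the 0.8 similarity threshold. It is not a description of AUGR's actual recognition model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(embedding, library, threshold=0.8):
    """Return the best-matching name in the library, or None if nothing is close enough.

    library maps a name to a reference embedding (hypothetical format).
    """
    best_name, best_score = None, threshold
    for name, reference in library.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name
```

Returning `None` below the threshold matters here: an unrecognized face should stay unnamed rather than be forced onto the closest library entry.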

Alerting: AUGR is currently linked to ATAK. When this feature is toggled on, AUGR automatically populates ATAK with the detections it finds, along with their positional data and a name (if a face was recognized). All AUGRs operating in an area can be connected to the same ATAK server, so AUGR can create a network of situational awareness with no overhead beyond placing the device and turning it on.
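ATAK commonly exchanges map markers as Cursor on Target (CoT) XML events. How AUGR actually talks to ATAK is not specified here, so the sketch below is only an assumption of what a detection message could look like. The function name, the UID format, the `a-u-G` type (unknown affiliation, ground), and the `m-g` value for `how` are illustrative choices, and sending the message to a server is left out.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone


def build_cot_event(uid, lat, lon, callsign="Unknown", stale_seconds=30, now=None):
    """Build a minimal CoT event string for one detection (illustrative only)."""
    now = now or datetime.now(timezone.utc)
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    event = ET.Element("event", {
        "version": "2.0",
        "uid": uid,              # hypothetical ID, e.g. device name plus track number
        "type": "a-u-G",         # assumed: unknown affiliation, ground track
        "how": "m-g",            # assumed: machine-derived position
        "time": now.strftime(fmt),
        "start": now.strftime(fmt),
        # After the stale time, clients may drop the marker from the map.
        "stale": (now + timedelta(seconds=stale_seconds)).strftime(fmt),
    })
    ET.SubElement(event, "point", {
        "lat": f"{lat:.6f}",
        "lon": f"{lon:.6f}",
        "hae": "0.0",            # height above ellipsoid, unknown here
        "ce": "9999999.0",       # circular error: large value means unknown
        "le": "9999999.0",       # linear error: large value means unknown
    })
    detail = ET.SubElement(event, "detail")
    # The callsign carries the recognized name, or a default when no face matched.
    ET.SubElement(detail, "contact", {"callsign": callsign})
    return ET.tostring(event, encoding="unicode")
```

A short stale time suits moving detections: if the device stops reporting a track, its marker ages out on its own instead of lingering on the map.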

This semester, we are seeking to further improve AUGR's hardware (finding a better camera, providing more compute) and to make the models that run on the device more robust (higher-fidelity object detection and recognition models). We also aim to solve the network problem with cheaper open-source mesh-network devices, which will allow AUGR to stay on a network without any external dependencies.

Challenges and Unknowns

Nothing like AUGR currently exists largely because of the mundane details that make engineering projects difficult in general. To build an effective product, our solution must optimize for power consumption, durability, and cost. All three of these qualities are at odds with one another. Balancing them will require significant technical and logistical work, and might prove harder than we currently expect.

We are also attempting to build something versatile and modular, which means striking a balance between versatility and specificity. The user shouldn't have to do any engineering work to deploy our product. However, we want the user to be able to tailor the product as much as possible to their use case. For example, to deliver AUGR to the battlefield, a user might carry it in a ruck on the way out to the AO. Depending on the mission, the user might instead strap an AUGR module to a drone and deliver it to the AO that way. This question of versatility applies to both software and hardware, and it will have to be considered at every step of the design process.

OUSD Research and Engineering

West Point