Abstract

This project would focus on reducing the manpower needed for constant drone surveillance. Using machine learning and facial recognition software, the drone would identify a target in its camera feed. The target's movement relative to the camera (i.e., its movement within the camera's field of view) would then be used to steer the drone so that it follows the target without any user input.

Problem Statement

Flying smaller drones, such as quadcopters, requires constant attention both to fly the drone and to gather intelligence from the flight. This typically means that manpower must be spent on drone operation, which reduces the flexibility and capability of groups in combat zones. This project aims to free up some of that manpower by giving troops a "set and forget" drone mode. In addition, this mode would not depend on GPS, allowing drones to be used in areas with limited GPS connectivity.

Proposal

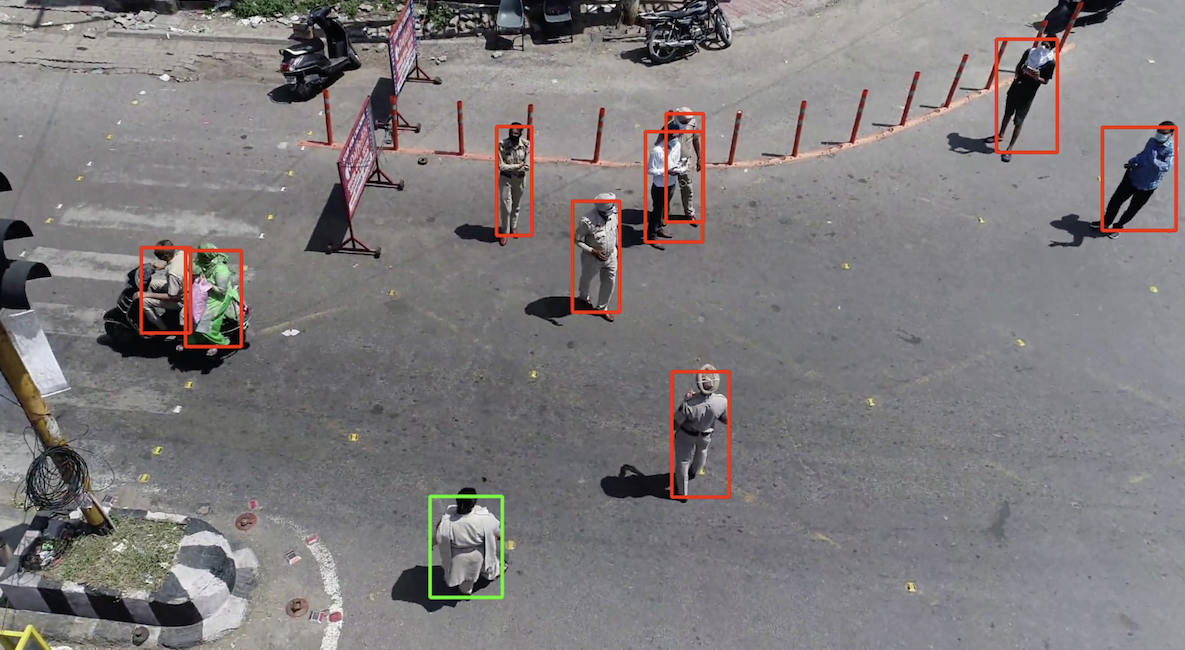

The project can be broken down into three parts, with one additional component inside the second part. First, there is the target identification software. This could use object detection and facial recognition so that the drone camera can pick up individual people or types of objects (vehicles, animals, etc.). A subset of this would be to use additional machine learning to pick out individual faces and highlight them. The second part involves giving commands based on how the target moves in the camera's field of view. This means writing code that works out where the target is on the screen (which can be done with the same bounding box that highlights it in step one) and then commands the drone to rotate, translate, or possibly change elevation. The additional component belongs to this step: the distance between the drone's camera and the target is needed to make accurate movements. Third and finally, these movement commands need to be translated into flight computer commands that the drone understands so that it actually carries out the movement.
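The second part, turning a bounding box into movement commands, could be sketched roughly as follows. This is only a minimal illustration under stated assumptions: the `BoundingBox` and `Command` types, the normalized [-1, 1] command range, the `target_fill`, `deadband`, and `gain` parameters, and the use of apparent box height as a stand-in for distance are all hypothetical choices made for this example. A real system would likely replace the size heuristic with a measured range, as the proposal suggests.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Pixel-space box around the target: top-left corner plus size."""
    x: float
    y: float
    width: float
    height: float


@dataclass
class Command:
    """Normalized setpoints in [-1, 1] (hypothetical interface, not a real SDK)."""
    yaw: float      # positive = rotate right
    climb: float    # positive = ascend
    forward: float  # positive = move toward the target


def clamp(value: float, low: float = -1.0, high: float = 1.0) -> float:
    return max(low, min(high, value))


def box_to_command(box: BoundingBox, frame_w: float, frame_h: float,
                   target_fill: float = 0.3, deadband: float = 0.05,
                   gain: float = 1.0) -> Command:
    """Proportional mapping from bounding-box position to drone commands."""
    # Offset of the box center from the frame center, scaled to [-1, 1].
    center_x = box.x + box.width / 2
    center_y = box.y + box.height / 2
    err_x = (center_x - frame_w / 2) / (frame_w / 2)
    err_y = (frame_h / 2 - center_y) / (frame_h / 2)  # image y grows downward

    # Assumption: box height relative to the frame approximates distance.
    # A positive error means the target looks too small, so move closer.
    err_size = target_fill - box.height / frame_h

    def shape(err: float) -> float:
        # Ignore small errors so the drone does not jitter around the setpoint.
        return 0.0 if abs(err) < deadband else clamp(gain * err)

    return Command(yaw=shape(err_x), climb=shape(err_y), forward=shape(err_size))
```

With a 640x480 frame, a box centered in the frame and filling 30% of its height produces an all-zero command, while a box that drifts to the right edge produces a positive `yaw`. The deadband is there because a purely proportional response to every pixel of detector noise would keep the drone constantly twitching.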

Challenges and Unknowns

Creating code that is tightly interwoven with hardware, as in this case, is not an easy task. Each part of the project described above raises its own problems.

First, in the object/target identification stage, the drone will typically not have a clear view of the target's face. This makes it hard to distinguish the target from other objects of interest, and the operator may have to select the target from among the detected objects. While some work has been done to make satellites and higher-flying UAVs better at identifying objects, building even semi-accurate identification software will still take a lot of work.

In the second phase, laser/LIDAR range finders might help measure the distance from the camera to the target, but the target may at times be lost. The program needs to detect when that happens and avoid issuing unwanted or unpredictable commands.

Finally, the command implementation has several tough problems. Simple home-made simulations, online virtual environments, and physical flight controllers on drones all use different interfaces, so piping commands from the movement code is very tricky to do correctly. There would therefore be a challenge in learning and using a manufacturer's SDK, or even in developing a simple simulation to demonstrate the tracking code. An alternative to integrating with a complicated and ever-changing commercial platform would be to use an open-source flight controller, although that would require significant middleware and software experience.

Some of these issues have already been solved by a group at the University of Washington, which has designed a similar system (https://www.groundai.com/project/eye-in-the-sky-drone-based-object-tracking-and-3d-localization/1), although its approach is slightly different.
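The lost-target concern raised for the second phase could be handled with a small state tracker that sits between the detector and the command code. The sketch below is one possible approach, not part of the original proposal: the `Mode` states, the `TrackMonitor` class, and the `lost_after` frame threshold are all hypothetical names and values chosen for illustration.

```python
from enum import Enum


class Mode(Enum):
    TRACKING = "tracking"  # target visible: movement commands may be issued
    HOLDING = "holding"    # target briefly missing: hover, issue no movement
    LOST = "lost"          # missing too long: hand control back to the operator


class TrackMonitor:
    """Counts consecutive frames without a detection (illustrative only)."""

    def __init__(self, lost_after: int = 30):
        # Assumed threshold: about one second of video at 30 frames per second.
        self.lost_after = lost_after
        self.missed = 0

    def update(self, detected: bool) -> Mode:
        """Call once per frame with whether the detector found the target."""
        if detected:
            self.missed = 0
            return Mode.TRACKING
        self.missed += 1
        if self.missed >= self.lost_after:
            return Mode.LOST
        return Mode.HOLDING
```

The idea is that movement commands are only generated in `TRACKING`. In `HOLDING` the drone hovers rather than acting on stale data, and in `LOST` it stops trying to follow and alerts the operator.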

OUSD Research and Engineering

West Point

Comments

andrew.heier | 17 February 2021

As you mention, a number of the components for such a capability have been proven out in academic prototypes and limited commercial offerings. Object detection is pretty easy, 3D SLAM is currently widely studied, and high accuracy isn't critical for target tracking. Have you thought about the flight controller? This is where the SOCOM use case diverges from most commercial applications.

Given a set of potential constraints imposed on the UAS by the operator, how should it optimally map and then surveil the environment? A real-time planner will need to balance the need to explore and exploit. For example, should it map both sides of a wall or revisit the location of potential targets? Mission parameters and sensor resolution limits make this hard.

awright | 19 February 2021

I like your idea. Depending on the type of object you want to detect, you would need to consider the area over which you must look to find that object and the speed at which that object could travel. Thus a person's face versus a vehicle convoy would be significantly different, given the walking speed of the person versus the speed of the vehicle. Also, the environment and, as you note, sensor viewing matter a lot. Is the person in a crowd of other people that produces many false alarms? Or is the vehicle in traffic? I think narrowing the use case to something specific like border patrol could help identify particular stimuli and scripted drone behaviors that would be relevant and useful.