Data Fusion

Overview

Today, there are more and more sensors on the battlefield: some are in the air, some in orbit, some worn by soldiers, some positioned on the ground, and so on. As this trend continues, these sensors will soon create an overwhelming amount of data, and it will become impossible for humans to effectively absorb it all and take the right action. Currently, much of this data is sent back to a headquarters office to be processed by people and software, and the resulting information and recommendations are then disseminated back out to the people in the field who are in a position to make a decision and act. This causes a significant delay and does not scale. Devise a system that turns data from distributed sensors into usable information, sends it to the right people, and does so scalably as the number of sensors and the volume of raw data grow exponentially.

Abstract

Today, the volume of raw data being collected is growing exponentially, so much so that humans cannot process and analyze it quickly enough for operators to use. Right now, turning collected data into usable information involves a long delay: the data is collected, sent to a headquarters office for analysis, and only then sent back to operators. We plan to develop a plug-in for ATAK (Android Team Awareness Kit) that analyzes data in real time as it is collected, virtually eliminating the delay between collecting data and using it. Because processing and analysis happen on operators' own devices as soon as the data arrives, operators no longer wait for a headquarters round trip. This will let them make better-informed decisions in a more timely manner.

Problem Statement

Today, there are more and more sensors on the battlefield. Some are in the air, some are in orbit, some are worn by soldiers, some are positioned on the ground, and so on. As this trend continues, there will soon be an overwhelming amount of data created by all these sensors, and it will become impossible for humans to effectively absorb it all and take the right action. Currently, much of this data is sent back to a headquarters office to be processed by people and software, and the resulting information and recommendations are then disseminated back out into the field to the people who can make a decision and act. This causes a significant delay and does not scale.

Proposal

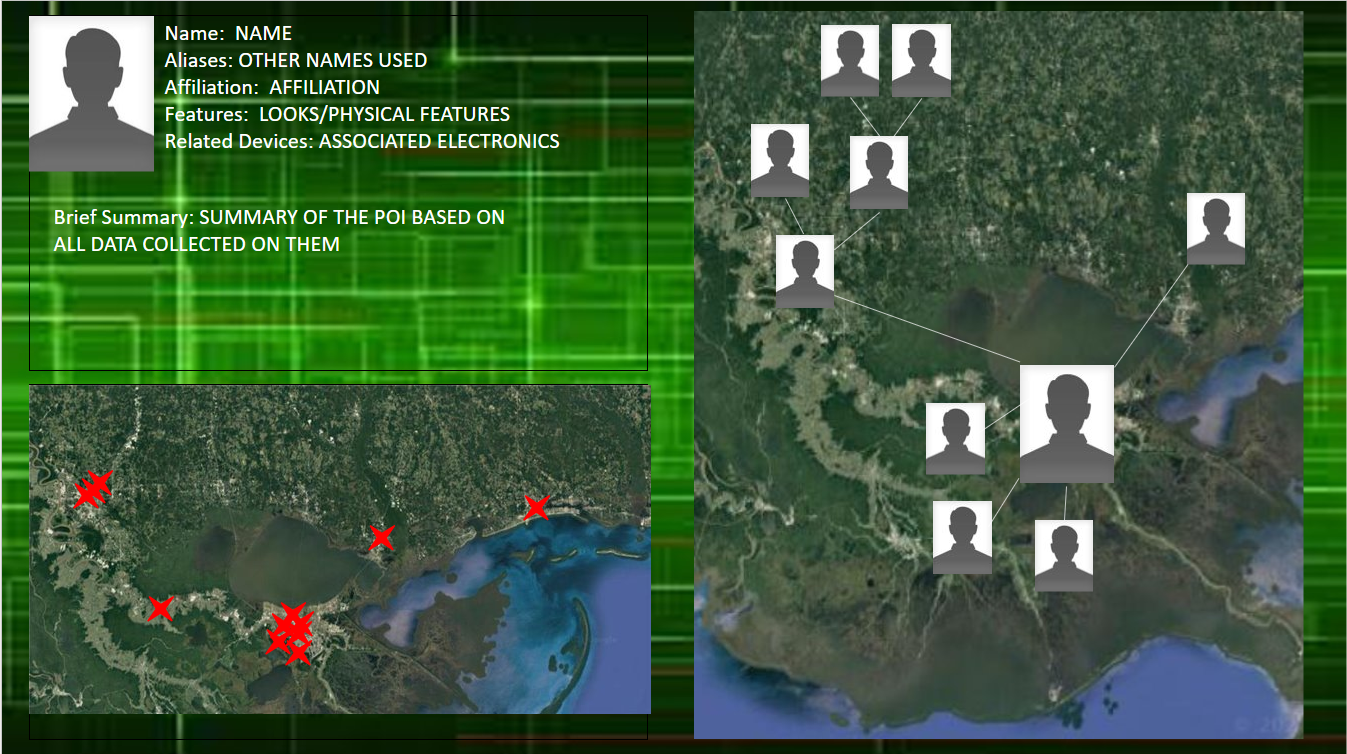

The goal of this project is to turn data from distributed sensors into usable information, send it to the right people, and do so scalably as the number of sensors and the volume of raw data grow exponentially. The ideal end state is an ATAK plug-in that ingests data sources and analyzes them in real time, directly on the device. To reach this end state, a large portion of the project will be spent researching different data sources: understanding how each is stored and how to merge them into a single, usable dataset. Once the data is in a usable format, we can ask operators what kind of interface they would like to see. We can then build that interface on top of the dataset so operators can use it at the edge in real time.
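
The merge step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's design. The two source formats (`from_ground_sensor`, `from_uav` and their field names) are hypothetical. Association is a simple greedy gate on time and great-circle distance, with arbitrary thresholds of 30 s and 100 m. The key idea is that each source is first normalized into one common `Observation` schema, which is what makes the sources mergeable at all.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List


@dataclass(frozen=True)
class Observation:
    """Common schema that every (hypothetical) source is normalized into."""
    source: str
    time: datetime  # timezone-aware, UTC
    lat: float      # degrees
    lon: float      # degrees
    label: str


def from_ground_sensor(rec: dict) -> Observation:
    # Hypothetical ground sensor format: epoch seconds, flat lat/lon keys.
    return Observation("ground", datetime.fromtimestamp(rec["epoch"], tz=timezone.utc),
                       rec["latitude"], rec["longitude"], rec["class"])


def from_uav(rec: dict) -> Observation:
    # Hypothetical UAV detector format: ISO-8601 timestamp, nested position.
    return Observation("uav", datetime.fromisoformat(rec["ts"]),
                       rec["pos"]["lat"], rec["pos"]["lon"], rec["object"])


def distance_m(a: Observation, b: Observation) -> float:
    """Haversine great-circle distance in metres."""
    r = 6_371_000.0
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dp, dl = p2 - p1, math.radians(b.lon - a.lon)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(h))


def fuse(obs: Iterable[Observation], max_s: float = 30.0, max_m: float = 100.0) -> List[List[Observation]]:
    """Greedy association: each observation joins the first group whose first
    member is within max_s seconds and max_m metres; otherwise it starts a new group."""
    groups: List[List[Observation]] = []
    for o in sorted(obs, key=lambda x: x.time):
        for g in groups:
            if (abs((o.time - g[0].time).total_seconds()) <= max_s
                    and distance_m(g[0], o) <= max_m):
                g.append(o)
                break
        else:
            groups.append([o])
    return groups


ground = [{"epoch": 1_700_000_000, "latitude": 34.0, "longitude": -117.0, "class": "vehicle"}]
uav = [{"ts": "2023-11-14T22:13:25+00:00", "pos": {"lat": 34.0003, "lon": -117.0002}, "object": "truck"},
       {"ts": "2023-11-14T22:20:00+00:00", "pos": {"lat": 34.1, "lon": -117.0}, "object": "person"}]
groups = fuse([from_ground_sensor(r) for r in ground] + [from_uav(r) for r in uav])
for g in groups:
    print([(o.source, o.label) for o in g])
```

Normalizing at ingest means that adding a new sensor type only requires one new adapter function; the association logic stays untouched.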

Challenges and Unknowns

As stated in the problem statement, there are many different types of sensors on the battlefield. One current unknown is which data is most common and where to focus our research efforts. We could research different types of data forever, so to get the project started we will have to pick a couple of data sources and use those. We want the end state of this project to be useful to operators, so we would like to know which data sources are most commonly seen and used. Another challenge is getting access to “real” data, since much of the data we will be looking at is probably classified at some level. In addition, if we do gain access to classified data, we will have to devise a way to keep data at different classification levels separate, since each data source may carry a different classification level.
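
One simple way to keep levels separate can be sketched under explicit assumptions. Every record carries a classification label, and a user only receives records at or below their clearance ("no read up," as in the Bell–LaPadula model). Any fused product is marked at the highest level of its inputs (a high-water mark). The `Level` values mirror the standard U.S. classification tiers, but `Record`, `releasable`, and `fuse_marking` are hypothetical names. The sketch illustrates the labeling idea only and is not an accredited cross-domain solution.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List


class Level(IntEnum):
    # Ordered so that a higher value means a more restrictive marking.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


@dataclass(frozen=True)
class Record:
    payload: str
    level: Level


def releasable(records: Iterable[Record], clearance: Level) -> List[Record]:
    """'No read up': a user sees only records at or below their clearance."""
    return [r for r in records if r.level <= clearance]


def fuse_marking(inputs: Iterable[Record]) -> Level:
    """High-water mark: a fused product inherits the highest level of its inputs."""
    return max(r.level for r in inputs)


sources = [Record("ground sensor track", Level.UNCLASSIFIED),
           Record("UAV imagery", Level.SECRET)]
fused = Record("fused track", fuse_marking(sources))
all_records = sources + [fused]
print([r.payload for r in releasable(all_records, Level.UNCLASSIFIED)])
```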

OUSD Research and Engineering

West Point

Comments

dBlocher | 14 October 2021

I appreciate your focus on the end user, and your desire to research the types of data used by soldiers. There are lots of challenging sub-topics in this area that you bring up: cross-sensor fusion and data formatting, multi-level security, etc. As suggested, I would focus on identifying a couple of sources of data that would be prime candidates for fusion and focus on building the machinery to fuse them. In terms of data classification, you can always leverage an unclassified data set for development and then do your final testing and deployment on a "real" data set. Talking to some operators may be a good way to identify data sources that are ripe for fusion.

cwdavis3 | 10 November 2021

The problem described could be broken into several very different (but necessary) components. For example:

1) Creating display formats that efficiently communicate a lot of disparate information. This is largely a Human Factors effort: how to use color, symbology, etc.; whether to use a plan-view map or the user's (ground) perspective; tables of info (e.g., range to target).

2) Communications networks for low latency collection of data from sensors, and dissemination of processed information to edge users. (This includes the multi-level security challenges you mentioned.)

3) Analytics to create condensed, rich displays for the user. For example: creating imagery with pertinent metadata labels (label buildings as market, pharmacy); indicating water and power shut-off locations or choke points; annotating blind alleys vs. through-ways; draping imagery on 3D renderings.

4) Ways for the end user to customize the display for their specific mission that day: pre-mission customization of display real estate, selection of priority data feeds, and dashboard indicators that alert when new data has come in.
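
The dissemination half of item 2 above can be illustrated with area-of-interest publish/subscribe. Each edge user registers a geographic box, and a processed product is pushed only to users whose box contains it. In this sketch, `Broker`, `BoundingBox`, and the product fields are hypothetical. `Broker` is an in-process stand-in for a real low-latency network transport, and the bounding-box test does not handle boxes that cross the antimeridian.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Deliver = Callable[[str, dict], None]


@dataclass(frozen=True)
class BoundingBox:
    south: float
    west: float
    north: float
    east: float

    def contains(self, lat: float, lon: float) -> bool:
        # Simple test; boxes crossing the antimeridian are not handled.
        return self.south <= lat <= self.north and self.west <= lon <= self.east


@dataclass
class Broker:
    """Hypothetical in-process stand-in for a real low-latency network transport."""
    subscribers: List[Tuple[str, BoundingBox, Deliver]] = field(default_factory=list)

    def subscribe(self, user: str, area: BoundingBox, deliver: Deliver) -> None:
        self.subscribers.append((user, area, deliver))

    def publish(self, product: dict) -> List[str]:
        """Push a processed product only to users whose area of interest contains it."""
        recipients = []
        for user, area, deliver in self.subscribers:
            if area.contains(product["lat"], product["lon"]):
                deliver(user, product)
                recipients.append(user)
        return recipients


inbox: Dict[str, List[dict]] = {}


def to_inbox(user: str, product: dict) -> None:
    inbox.setdefault(user, []).append(product)


broker = Broker()
broker.subscribe("squad_a", BoundingBox(34.0, -117.1, 34.1, -117.0), to_inbox)
broker.subscribe("squad_b", BoundingBox(35.0, -118.0, 35.1, -117.9), to_inbox)
sent = broker.publish({"lat": 34.05, "lon": -117.05, "summary": "vehicle convoy"})
print(sent)  # ['squad_a']
```

Filtering at publish time means each edge device receives only what falls inside its area, so per-user traffic tracks local activity rather than the total number of sensors.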

The problem area is so broad that you might pick a specific example to demonstrate. Item 4 might provide some bite-sized challenges that could be addressed. Services and data products from the other areas could be mocked up to do a demonstration. You could demo concepts without having to get hung up on the idiosyncrasies of specific sensors.
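
Following the suggestion to mock up the other areas, item 4 can be prototyped without any real sensors. In the sketch below, all names are hypothetical. `mock_feed` stands in for a sensor service, and `MissionProfile` holds the pre-mission selection of feeds and their priorities. `Dashboard.alerts_for` raises an alert only for new items on selected feeds, highest priority first. Item IDs are assumed to increase monotonically within each feed.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Iterator, List, Tuple


@dataclass
class MissionProfile:
    """Pre-mission customization: selected feeds mapped to a priority (1 = highest)."""
    priorities: Dict[str, int]


@dataclass
class Dashboard:
    profile: MissionProfile
    # Highest item ID already shown for each feed; assumes IDs increase per feed.
    last_seen: Dict[str, int] = field(default_factory=dict)

    def alerts_for(self, updates: Iterable[Tuple[str, int]]) -> List[Tuple[int, str, int]]:
        """Return (priority, feed, item_id) alerts for new data, highest priority first."""
        alerts = []
        for feed, item_id in updates:
            if feed not in self.profile.priorities:
                continue  # feed not selected for today's mission
            if item_id <= self.last_seen.get(feed, -1):
                continue  # already displayed
            self.last_seen[feed] = item_id
            alerts.append((self.profile.priorities[feed], feed, item_id))
        return sorted(alerts)


def mock_feed(name: str, count: int) -> Iterator[Tuple[str, int]]:
    """Hypothetical mocked sensor service emitting `count` numbered items."""
    for item_id in range(count):
        yield name, item_id


dash = Dashboard(MissionProfile({"uav_video": 1, "ground_acoustic": 2}))
updates = [*mock_feed("ground_acoustic", 1), *mock_feed("uav_video", 1),
           ("weather", 0),     # not selected, so ignored
           ("uav_video", 0)]   # duplicate, so ignored
alerts = dash.alerts_for(updates)
print(alerts)  # [(1, 'uav_video', 0), (2, 'ground_acoustic', 0)]
```

Because the feeds are mocked behind a simple generator, a real sensor service could later replace `mock_feed` without changing the dashboard logic.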