Abstract

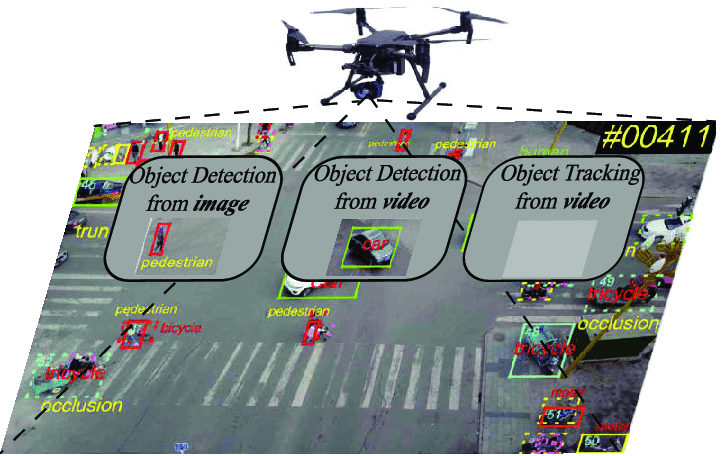

Object detection plays a central role in autonomy, with applications including search-and-rescue, remote sensing, security, and surveillance. Autonomous systems must accurately identify a multitude of objects in real time across challenging environmental conditions. With RGB and thermal IR (RGBT) sensor fusion, deep learning methods such as Convolutional Neural Networks (CNNs) can identify objects more accurately while still meeting timing and size, weight, and power (SWaP) constraints.

Problem Statement

FY23 Challenge 1C. "On-board Perception."

A UAV, specifically a quadcopter, offers precision control and the ability to operate in urban or confined environments. However, a UAV that constantly requires attention can pull the operator's focus away from the mission without clear added value. With fast and accurate object detection, a UAV could alert the user only on pre-defined triggers (e.g., 4 pedestrians or a vehicle detected).
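As a minimal sketch of how such pre-defined triggers might be evaluated on each frame, the example below counts confident detections per class and reports which triggers fire. The trigger table, the `should_alert` function, the `(label, confidence)` detection format, and the 0.5 confidence cutoff are all assumptions made for illustration; they are not part of any VOXL2 API.

```python
from collections import Counter

# Hypothetical trigger table: class label -> minimum count that raises an alert.
TRIGGERS = {"pedestrian": 4, "vehicle": 1}


def should_alert(detections, triggers=TRIGGERS, min_confidence=0.5):
    """Return the sorted labels whose trigger condition is met in one frame.

    `detections` is a list of (label, confidence) pairs, an assumed
    format for the detector output.
    """
    # Count only detections at or above the (assumed) confidence cutoff.
    counts = Counter(
        label for label, confidence in detections if confidence >= min_confidence
    )
    return sorted(
        label for label, needed in triggers.items() if counts[label] >= needed
    )


frame = [
    ("pedestrian", 0.9),
    ("pedestrian", 0.8),
    ("vehicle", 0.7),
    ("pedestrian", 0.3),  # below the cutoff, so it is ignored
]
print(should_alert(frame))  # only the vehicle trigger fires
```

In practice the check would likely be smoothed over several consecutive frames so that a single spurious detection does not alert the user.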

UAVs operate in challenging environmental conditions with varying levels of illumination. Adding a thermal IR camera is a natural choice, but it turns object detection into a sensor fusion problem that calls for new ML models and techniques. The constraint of on-board computing further restricts the scope of feasible algorithms.

Proposal

Beginning with an open-source development UAV, specifically the VOXL2 Sentinel, this project aims to explore and test various deep learning models trained for object detection. Starting with a ready-to-fly platform already equipped with GPS navigation, obstacle-avoidance, and other features allows the research focus to be entirely on the object detection aspect, and it makes the project more robust. The VOXL2 model in particular is an apt choice because its hardware is specifically designed for machine learning applications, and it has the capacity to run five neural networks in parallel.

Adding a wide-angle Long Wave Infrared (LWIR) thermal camera, such as the Teledyne FLIR Boson, opens the door to sensor fusion. This approach is dubbed “RGBT sensor fusion”. Although it is mentioned semi-frequently in the UAV object detection literature, few results have been published; most work focuses on RGB cameras alone.

The general network architecture would be one-stage, which sacrifices some accuracy in exchange for speed. Building on existing Single Shot Detection (SSD) and You Only Look Once (YOLO) models would be the natural extension. YOLOv3 performed well on average, but the newer YOLOv5 might be a stronger candidate (Wu et al., 2021). Another model, MobileNetV1-SSD, would also fit well. These models would be adapted using TensorFlow Lite, which allows for simple implementation on the VOXL2, and they can be trained on the Princeton Research Computing clusters.

Datasets

As with any deep learning project, large annotated datasets are required. Multiple publicly available datasets have been identified as potential candidates, and data augmentation (rotation, cropping, scaling) would be applied to adapt them to the specific sensor platform. As a last resort, manual annotation could be performed, but it would be prohibitively expensive.

UAV:

- Stanford Drone Dataset 2016. It consists of RGB videos with ten object categories, taken from an altitude of 80m. (https://cvgl.stanford.edu/projects/uav_data/)

- Car Parking Lot (CARPK) dataset. It consists of RGB videos with vehicle counting, taken from an altitude of 40m. (https://lafi.github.io/LPN/)

- DroneVehicle dataset. It consists of RGB and infrared images divided into 5 categories. (https://github.com/VisDrone/DroneVehicle)

- BIRDSAI dataset. It consists of IR videos taken from a fixed-wing aircraft, divided into 8 mostly animal categories. (https://sites.google.com/view/elizabethbondi/dataset)

Vehicle:

- Teledyne ADAS FLIR. It consists of annotated RGB-thermal pairs divided into 15 object categories. (https://github.com/princeton-computational-imaging/SeeingThroughFog)

- KAIST Multispectral dataset. It consists of RGB-thermal, stereo, and lidar frames. KAIST Pedestrian 2017 in particular has annotations for RGB-thermal pairs divided into 3 object categories. (https://github.com/SoonminHwang/rgbt-ped-detection)

- SeeingThroughFog dataset 2021. It consists of RGB, lidar, radar, and NIR sensors across weather conditions. (https://github.com/princeton-computational-imaging/SeeingThroughFog)
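When the augmentations mentioned above (rotation, cropping, scaling) are applied to detection datasets, the bounding-box annotations have to be transformed together with the pixels. The sketch below shows the box arithmetic only, assuming boxes are stored as `(x_min, y_min, x_max, y_max)` in pixel coordinates. The function names and the example numbers are hypothetical, and arbitrary-angle rotation is left out in favor of a 90-degree case.

```python
def scale_box(box, sx, sy):
    """Scale an (x_min, y_min, x_max, y_max) box by per-axis factors."""
    x0, y0, x1, y1 = box
    return (x0 * sx, y0 * sy, x1 * sx, y1 * sy)


def crop_box(box, crop):
    """Clip a box to a crop window and shift it into the crop's coordinates.

    Returns None when the box lies entirely outside the crop window.
    """
    x0, y0, x1, y1 = box
    cx0, cy0, cx1, cy1 = crop
    nx0, ny0 = max(x0, cx0), max(y0, cy0)
    nx1, ny1 = min(x1, cx1), min(y1, cy1)
    if nx0 >= nx1 or ny0 >= ny1:
        return None
    return (nx0 - cx0, ny0 - cy0, nx1 - cx0, ny1 - cy0)


def rotate_box_90_cw(box, height):
    """Rotate a box 90 degrees clockwise; `height` is the original image height.

    A point (x, y) maps to (height - y, x) in the rotated image.
    """
    x0, y0, x1, y1 = box
    return (height - y1, x0, height - y0, x1)


# Hypothetical annotation in a 640x512 frame.
box = (100, 50, 200, 150)
half = scale_box(box, 0.5, 0.5)            # downscale the frame by 2
print(half)
print(crop_box(half, (40, 20, 90, 70)))    # crop window in the scaled frame
print(rotate_box_90_cw(box, height=512))   # rotate the original frame
```

Boxes that are heavily truncated by a crop are often dropped rather than kept; the threshold for doing so would be a design choice for the project.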

Resources

Through SOCOM Ignite, I plan to receive all critical hardware, principally the ModalAI VOXL2 Sentinel drone and the most relevant sensors (e.g., FLIR Boson, Chameleon3).

Through my university, I have applied for $1100 in funding and will request access to the Princeton Research Computing clusters for model training. Additionally, I may request Microsoft Azure cloud computing credits. Any extra funds would go toward higher quality or different sensors, depending on the progress of the project.

Challenges and Unknowns

Datasets

The largest challenge will be selecting the correct datasets and applying any necessary augmentation. The listed datasets vary greatly in resolution and annotation, which can play a major role in accuracy. This is particularly relevant if any of the vehicle-perspective datasets are selected. Additional recommendations of datasets would be greatly appreciated.

Effective fusion

Several RGBT fusion methods have been proposed, but the crux of the issue is for the model to know when, and by how much, to weight the RGB image relative to the infrared image. Exploiting this complementarity will require substantially more research.
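To make the weighting question concrete, the sketch below shows one very simple option: a hand-tuned, illumination-based weight applied to per-object confidence scores from separate RGB and thermal detectors. It is an illustrative baseline under stated assumptions, not the method this project will necessarily adopt. The function names, the brightness thresholds (40 and 120 on a 0-255 scale), and the linear ramp are all hypothetical.

```python
def illumination_weight(mean_brightness, low=40.0, high=120.0):
    """Map mean RGB brightness (0-255) to an RGB weight in [0, 1].

    Below `low` the RGB stream is ignored; above `high` it is fully
    trusted; in between the weight ramps linearly. Thresholds are
    hypothetical and would need tuning on real data.
    """
    if mean_brightness <= low:
        return 0.0
    if mean_brightness >= high:
        return 1.0
    return (mean_brightness - low) / (high - low)


def fuse_scores(rgb_score, thermal_score, mean_brightness):
    """Blend two confidence scores for the same object with the weight above."""
    w = illumination_weight(mean_brightness)
    return w * rgb_score + (1.0 - w) * thermal_score


# Night scene: the fused score follows the thermal detector.
print(fuse_scores(rgb_score=0.2, thermal_score=0.9, mean_brightness=20))
# Bright daylight: the fused score follows the RGB detector.
print(fuse_scores(rgb_score=0.8, thermal_score=0.3, mean_brightness=200))
```

A fixed rule like this cannot account for cases where thermal contrast is poor in daylight or where RGB remains useful at night under artificial lighting, which is why a learned weighting inside the network is the more interesting research direction.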

Testing limitations

With a quadcopter platform and extensive on-board computing, flight time may be substantially limited by battery constraints. Additionally, real-world scenario testing would need to be replicable in a realistic environment.

References

X. Wu, W. Li, D. Hong, R. Tao and Q. Du, "Deep Learning for Unmanned Aerial Vehicle-Based Object Detection and Tracking: A survey," in IEEE Geoscience and Remote Sensing Magazine, vol. 10, no. 1, pp. 91-124, March 2022, doi: 10.1109/MGRS.2021.3115137.

Note: initial graphic from Wu et al.

OUSD Research and Engineering

West Point