Data Fusion

Overview

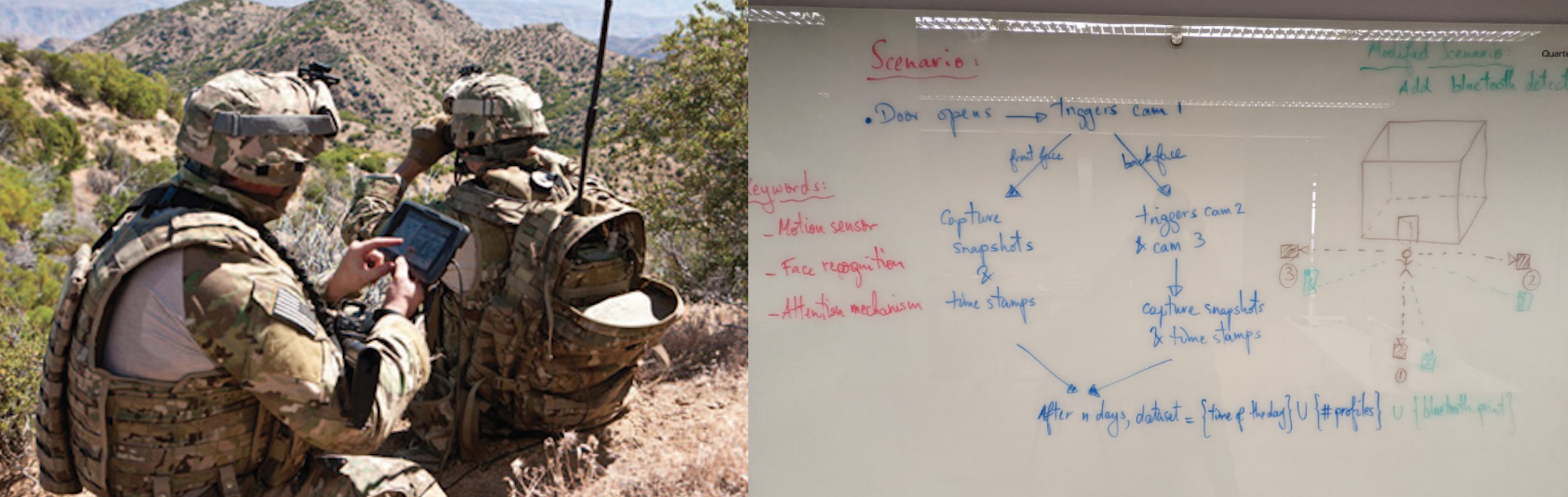

Today, there are more and more sensors on the battlefield: some in the air, some in orbit, some worn by soldiers, some positioned on the ground. As this trend continues, these sensors will soon produce an overwhelming amount of data, and it will become impossible for humans to absorb it all and take the right action. Currently, much of this data is sent back to a headquarters office to be processed by people and software, and the resulting information and recommendations are then disseminated back into the field to the people who are in a position to decide and act. This round trip causes a significant delay and does not scale. Devise a system that turns data from distributed sensors into usable information and sends it to the right people, and that does so scalably as the number of sensors and the volume of raw data grow exponentially.

Abstract

On the modern battlefield, operators need a scalable system that turns data from distributed sensors into usable information and quickly sends it to the right people. A modular, flexible platform lets operators tailor the system to the needs of the mission, rather than the other way around.

Our solution will encompass both the hardware and the software that operators need to set up remote surveillance operations without complex, power-hungry devices, and it will be easy to set up, configure, and modify in the field. In addition, AI and edge processing will be used to reduce the amount of irrelevant data sent to analysts.

Problem Statement

How can we maximize the battery lifetime of a sensor suite, while also ensuring that only relevant data is communicated back to the operator?

How can we make the configuration of this sensor system simple enough to be operator-friendly while keeping it versatile enough to handle any possible mission?

Proposal

We will create a programming language for sensor configuration, driven through a graphical user interface (GUI), that enables operators to employ a sensor suite in any configuration the mission requires. The system will also be compatible with various standard sensors found in everyday devices, making it easier to set up in the field with limited resources. Because extending the runtime of the sensor suite is extremely important, reducing power consumption will be a priority in every aspect of the design.

Software

- GUI that operators use to set up the configuration of the sensor suite

- Code to connect the inputs and outputs of multiple sensors together seamlessly

- Eventual integration of facial recognition and voice recognition (machine learning) to collect and send back only the relevant data

- ATAK compatibility for operators to configure the sensor suite in the field

- Runtime optimization to reduce power consumption
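As a minimal sketch of how the software items above might fit together, the following shows operator-defined rules that connect one sensor's output to another sensor's input and forward only relevant readings. Every name here (`Rule`, `SensorSuite`, `wake_camera`, the sensor labels, and the thresholds) is a hypothetical placeholder chosen for illustration, and the person detection is faked rather than performed by a real model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Rule:
    """One operator-defined link: when `source` reports, maybe run `action`."""
    source: str                                   # sensor name (hypothetical)
    action: Callable[[dict], None]                # what to do with the reading
    predicate: Callable[[dict], bool] = lambda reading: True  # relevance filter


@dataclass
class SensorSuite:
    rules: Dict[str, List[Rule]] = field(default_factory=dict)
    outbox: List[dict] = field(default_factory=list)  # stand-in for the uplink

    def connect(self, rule: Rule) -> None:
        """Register a rule, as the GUI might when an operator draws a link."""
        self.rules.setdefault(rule.source, []).append(rule)

    def on_reading(self, source: str, reading: dict) -> None:
        """Dispatch a reading to every rule attached to its source."""
        for rule in self.rules.get(source, []):
            if rule.predicate(reading):
                rule.action(reading)

    def send(self, reading: dict) -> None:
        """Queue a reading for transmission to the operator."""
        self.outbox.append(reading)


suite = SensorSuite()


def wake_camera(pir_reading: dict) -> None:
    # Placeholder for powering up a camera and running an on-device detector.
    # The detection result is faked from the PIR level purely for illustration.
    frame = {"sensor": "camera_1", "person_detected": pir_reading["level"] > 0.8}
    suite.on_reading("camera_1", frame)


# Motion on the PIR sensor wakes the camera; the camera stays off otherwise.
suite.connect(Rule("pir_1", wake_camera, lambda r: r["level"] > 0.5))
# Only frames flagged as relevant are sent back to the operator.
suite.connect(Rule("camera_1", suite.send, lambda f: f["person_detected"]))

for level in (0.2, 0.6, 0.9):
    suite.on_reading("pir_1", {"sensor": "pir_1", "level": level})

print(len(suite.outbox))  # 1: only the strongest reading produced a relevant frame
```

Keeping the rules as plain data attached to sensor names is one way a GUI could generate or edit a configuration without the operator writing code; a fielded design would likely differ.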

Hardware

- A prototype of the software solution, running on several common components operators may encounter in the field (e.g., a microcontroller, PIR sensors, microphones, cameras)

- Minimized power usage of off-the-shelf components and sensors through easy-to-complete modifications that operators could perform themselves in the field
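To compare candidate power-saving modifications, a rough runtime estimate based on duty cycling may be useful. The sketch below assumes a simple two-state model (active and sleep) and ignores self-discharge, temperature, and converter losses; the function name and all capacity and current figures are illustrative placeholders, not measurements from any real hardware.

```python
def estimated_runtime_hours(capacity_mah: float,
                            active_ma: float,
                            sleep_ma: float,
                            duty_cycle: float) -> float:
    """Estimate runtime from the time-weighted average current draw.

    duty_cycle is the fraction of time (0 to 1) spent in the active state.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    if average_ma <= 0:
        raise ValueError("average current must be positive")
    return capacity_mah / average_ma


# Illustrative numbers only: a 2000 mAh pack, 100 mA awake, 0.1 mA asleep.
always_on = estimated_runtime_hours(2000, 100, 0.1, duty_cycle=1.0)
mostly_asleep = estimated_runtime_hours(2000, 100, 0.1, duty_cycle=0.01)
print(f"always on: {always_on:.0f} h, 1% duty cycle: {mostly_asleep:.0f} h")
```

Under these assumed figures, the sleep current dominates once the duty cycle is small, which is why both waking the system less often and lowering idle draw matter for runtime.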

Challenges and Unknowns

- Battery size limitations

- Limited data storage and transmission

- Durability/length of operation

- Reducing false positive system activations

- Ability to be used by untrained operators

- Compatibility with off-the-shelf sensors/hardware

OUSD Research and Engineering

West Point