Abstract

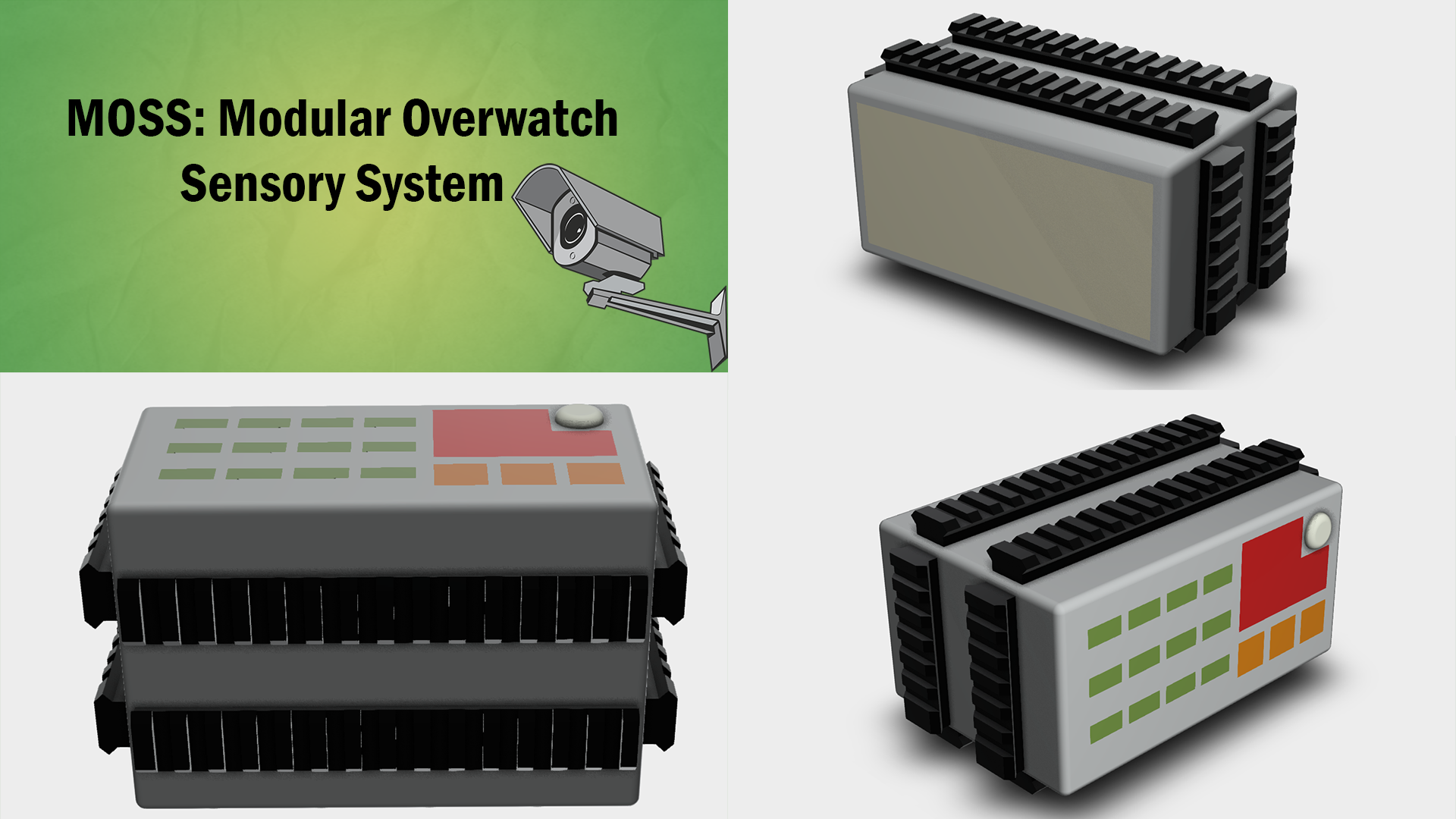

The way we currently collect sensory data is complex and disconnected. Each sensor or data source is handled separately by an operator at HQ, who has to analyze and understand the data and then disseminate the right information to the right person in the field. Because this information is often time sensitive, the current system can put operators in greater danger when the information is obsolete by the time it reaches them. Our project, Modular Overwatch Sensory System (MOSS), focuses on automating the acquisition and processing of sensory data on a modular platform and eliminating the need for a standalone operator to analyze sensory data.

Problem Statement

Operators face increased danger when available data is not readily accessible to them. The issue is not a lack of data or of sensory collection methods, but one of timely data analysis and delivery. The task is to devise a system that turns data from distributed sensors into comprehensible information, sends it to the right people, and does so scalably as the number of sensors and the volume of raw data grow exponentially.

Proposal

MOSS is a hardware and software solution with three primary goals:

- Scalability--the ability to swap different sensors, additional sensory modules, and separate data streams in and out, paired with an equally scalable software application that can still provide useful information to the operators when some sensors are not available.

- Versatility--regardless of time and environment, whether it's raining or dark, the system will continue to provide dependable and vital information from the areas of coverage. The hardware modules can be deployed statically for surveillance or on a moving platform, such as a UAV or RC vehicle, through a remote connection.

- Accuracy and Usefulness--the system’s main effort is to provide operators with accurate, useful, and easily digestible information. This can include personnel, affiliation, weapons, location, direction of movement, and more, depending on the types of data being analyzed.

On the hardware front, MOSS seeks to create a standardized sensory module that can be deployed in any type of environment to read and record raw data from the operators' surroundings. Depending on intended use, a module is expected to cost between ~$90 and ~$200. As the need for coverage grows, operators can easily deploy more sensory modules to cover larger or more distributed areas, and can even utilize encrypted and secure remote connections. Hardware modules will use small, distributed, low-power computers to do some of the data processing and formatting before sending the data to the central unit. Currently, we are looking at prototyping this system on a Raspberry Pi 4B as the distributed computing platform (which accounts for $35 of the total cost).

All data MOSS collects will then be consolidated in the central processing unit, where MOSS’s software implementation can intelligently sort through the data. This central unit takes the form of a computer or mobile device that is in the hands of the operators and connected to all deployed sensory modules--through both physical and network connections--so that information reaches them as directly as possible. The main component of the software implementation, and what allows the software to be intelligent and flexible, will be machine learning, which we can use to discern and even identify faces, bodies, and objects (such as weapons) to create patterns of life for possible key suspects. MOSS will be built on open source software, with current plans to implement it fully in Python.
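As a rough illustration of the consolidation step described above, the following is a minimal sketch and not the project's actual design. The `Reading` message format, the `CentralUnit` class, the module IDs, and the 5-second freshness threshold are all assumptions made for the example. It shows one way a central unit could keep the newest reading per sensor and still report a usable picture when some sensors are stale or missing.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class Reading:
    """One pre-processed observation sent by a sensory module (hypothetical format)."""
    module_id: str
    sensor: str        # e.g. "camera", "microphone"
    timestamp: float   # seconds since some shared epoch
    payload: Any       # whatever the module extracted from the raw data


@dataclass
class CentralUnit:
    """Collects readings from every module and reports which sensors are missing."""
    expected: dict[str, set[str]]  # module_id -> sensors it should report
    latest: dict[tuple[str, str], Reading] = field(default_factory=dict)

    def ingest(self, reading: Reading) -> None:
        # Keep only the newest reading per (module, sensor) pair.
        key = (reading.module_id, reading.sensor)
        current = self.latest.get(key)
        if current is None or reading.timestamp >= current.timestamp:
            self.latest[key] = reading

    def snapshot(self, now: float, max_age: float = 5.0) -> dict[str, Any]:
        # Split expected sensors into fresh data and stale or missing ones,
        # so the operator still gets a picture when some sensors drop out.
        fresh, unavailable = [], []
        for module_id, sensors in sorted(self.expected.items()):
            for sensor in sorted(sensors):
                reading = self.latest.get((module_id, sensor))
                if reading is not None and now - reading.timestamp <= max_age:
                    fresh.append(reading)
                else:
                    unavailable.append((module_id, sensor))
        return {"fresh": fresh, "unavailable": unavailable}


# Usage: module m1 has lost its microphone, module m2 has gone quiet.
hub = CentralUnit(expected={"m1": {"camera", "microphone"}, "m2": {"camera"}})
hub.ingest(Reading("m1", "camera", 100.0, {"people": 2}))
hub.ingest(Reading("m2", "camera", 90.0, {"people": 0}))
view = hub.snapshot(now=101.0)
print(view["unavailable"])
```

Listing unavailable sensors explicitly, rather than silently omitting them, is one possible way to support the scalability goal of staying useful when some sensors are not available.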

At the most basic level, our system would include the following:

- A sensory module with at least one sensor connected to it (a camera is recommended, but any other single sensor would work).

- A central computer to run the application and to connect the sensory module to (an MSD would be a great example that is already in military supply chains).

- The software loaded onto the sensory module and the central computer.

Challenges and Unknowns

- Though machine learning has come a long way in the last few years, with many easily usable, accessible, and open source libraries available, the biggest challenge in using it will be ensuring that results are accurate--this may require operator intervention, or we could work towards building several software and hardware contingencies to reduce the likelihood of bad data.

- Though physical connections between modules and the central unit are mostly simple, encrypted remote connections will be much harder to deploy, especially when taking into consideration possible areas of operation where there may not be networks in the first place.

- The operational environments operators may find themselves in are not conducive to powering large data gathering and processing systems. Possible solutions may include solar power or other contingent power sources.

OUSD Research and Engineering

West Point

Comments

RA23195 | 26 January 2021

Team MOSS,

I like your idea of attempting the processing at the edge using the Pi4 4GB or 8GB. There is a lot of support and open tools you can leverage to get started. Besides the camera, can you think of other sensors you could integrate with the Pi that would be useful? Assuming you are able to perform the analytics directly on the Pi, could you envision a distributed network of Pis working in tandem and generating a single, easily accessible data feed?

saidkharboutli | 28 January 2021

Raoul,

Thank you for the feedback! The other sensors we are currently thinking of integrating are:

Regarding your second question: the fundamental idea behind MOSS is bringing in these many data streams from several sources and making them work together to give the operators the data they need. The network of distributed Pis working in tandem, together with our machine learning layers parsing the given data in real time, is therefore what we envision as the major goal of the project as a whole. The split in analytical load between the central computer and the Raspberry Pis isn't entirely clear just yet--we will likely have to test the abilities of an average field-deployable computer and a set of Raspberry Pis to find an effective split in analytical workload between the distributed Pis and the central hub. To clarify, however, we also aim to support digital data streams (e.g., an mp4 file or continuous satellite imagery) beyond the data fed by the hardware modules, and the software can be used independently of these modules.

The key to the flexibility of real-time data processing will come from our use of several machine learning "layers". Unfortunately, we do not have general artificial intelligence just yet, but we have easily accessible and open source machine learning libraries that work very well for select tasks (speech, video, or facial recognition, as examples). We want to use these different machine learning libraries as "layers" in our data processing, such that the video ML will search for faces or trained objects (such as a car or a weapon) while the speech ML is processing the data for key words. Or, if we have an ML trained to identify objects of interest in satellite imagery (a large building or a gathering of vehicles, for example), we apply that ML layer to the given data stream of satellite imagery.
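The "layers" idea above could be sketched roughly as follows. This is only an illustration under stated assumptions: `LayerPipeline`, `keyword_layer`, `object_layer`, the stream type names, and the watch lists are hypothetical, and the two layers are simple stand-ins where real ones would wrap trained models from an ML library.

```python
from collections import defaultdict
from typing import Any, Callable

# A "layer" takes one chunk of data and returns a list of findings.
Layer = Callable[[Any], list]


class LayerPipeline:
    """Routes each chunk to every layer registered for its stream type."""

    def __init__(self) -> None:
        self._layers: dict = defaultdict(list)

    def register(self, stream_type: str, layer: Layer) -> None:
        self._layers[stream_type].append(layer)

    def process(self, stream_type: str, chunk: Any) -> list:
        findings: list = []
        # Stream types with no registered layer simply yield no findings.
        for layer in self._layers.get(stream_type, []):
            findings.extend(layer(chunk))
        return findings


def keyword_layer(transcript: str) -> list:
    # Stand-in for a speech layer: assumes speech is already transcribed.
    watch_words = {"convoy", "weapon"}
    words = transcript.lower().split()
    return [f"keyword:{w}" for w in words if w in watch_words]


def object_layer(labels: list) -> list:
    # Stand-in for a video layer: assumes a detector already labeled the frame.
    objects_of_interest = {"car", "weapon"}
    return [f"object:{name}" for name in labels if name in objects_of_interest]


pipeline = LayerPipeline()
pipeline.register("audio", keyword_layer)
pipeline.register("video", object_layer)

audio_findings = pipeline.process("audio", "The convoy left at dawn")
video_findings = pipeline.process("video", ["tree", "car", "person"])
satellite_findings = pipeline.process("satellite", b"")
print(audio_findings, video_findings, satellite_findings)
```

Registering layers per stream type keeps each model independent, so a satellite imagery layer could later be added without touching the audio or video layers.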

I hope this gave you a better idea of our goals and answered your questions! It would be my pleasure to answer any other investigative questions you may have for us.

Thank you!

kcole | 8 February 2021

Perhaps an obvious extension / decision - have you considered distributing the algorithms you are considering closer to the sensors? As described, the distributed computers are primarily focused on formatting and sending. Although not without disadvantages (complexity / scope creep / cost / etc.), there are some key advantages (reduced comm bandwidth, no single point of failure, lower latency, etc.) to simply distributing the processing as opposed to communicating everything to a central processing unit.

scwolpert | 9 February 2021

I really like the concept you described in your comment of picking a few sensors to "layer together". Sometimes ML confidence scores can be shaky, but add in other sensors and you can really improve the quality of the data. For example, if you get detections from the motion/PIR sensor + sound + imagery, you know it's likely the real thing, which would help reduce "drowning in data". Also, using other sensors can help ground truth the imagery, so any future training is easier.

A few thoughts:

Awesome!
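The corroboration idea in the comment above (motion/PIR + sound + imagery agreeing on one event) could be sketched as follows. This is a hedged illustration, not a method proposed by the team: the `Detection` record, the `corroborate` function, the 3-second window, and the two-sensor threshold are assumptions, and the noisy-OR combination is only valid to the extent that the sensors err independently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    sensor: str        # "pir", "sound", "imagery", ...
    timestamp: float   # seconds
    confidence: float  # 0.0 to 1.0, as reported by that sensor's layer


def corroborate(detections, window=3.0, min_sensors=2):
    """Return (confirmed, combined_confidence) for the most recent event."""
    if not detections:
        return False, 0.0
    latest = max(d.timestamp for d in detections)
    # Keep the strongest detection per sensor inside the time window.
    best = {}
    for d in detections:
        if latest - d.timestamp <= window:
            best[d.sensor] = max(best.get(d.sensor, 0.0), d.confidence)
    # Noisy-OR: probability that at least one sensor is right,
    # assuming (optimistically) that the sensors err independently.
    miss = 1.0
    for confidence in best.values():
        miss *= 1.0 - confidence
    return len(best) >= min_sensors, 1.0 - miss


events = [
    Detection("pir", 10.0, 0.6),
    Detection("sound", 11.0, 0.5),
    Detection("imagery", 11.5, 0.7),
]
confirmed, combined = corroborate(events)
alone_confirmed, alone_combined = corroborate([Detection("imagery", 5.0, 0.7)])
print(confirmed, round(combined, 2), alone_confirmed, round(alone_combined, 2))
```

Requiring agreement from at least two sensor types before alerting is one simple way to cut false alarms. The combined score is higher than any single sensor's score only because of the independence assumption, so it should be treated as a ranking aid rather than a calibrated probability.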

dBlocher | 9 February 2021

This is a very good grand vision. I agree with all of the above that it would be good to push as much as you can to the nodes, lest you end up with a system that doesn't scale or that has very high comms requirements to push data around. In that sense, identifying the key pieces of information to be sent back from individual sensors would be helpful - do sensors need to chip out pictures of faces to send back? What type of data bandwidth would such a system require at levels, and would that look like a fiber backbone to each sensor, fit inside the bandwidth of a walkie-talkie-sized channel, or be smaller? For the sake of scoping, you might stick to one sensor modality (IR or EO) for actual hardware development, with perhaps a block diagram illustrating how other types of sensors would fit in. Finally, it might be worthwhile to make the mission more concrete - exactly what mission(s) would your network of sensors enable? Having a good sense of the mission would help backfill the questions of what ISR modalities you need and how to architect them.
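The bandwidth question raised above can be explored with simple arithmetic: sustained rate = payload size × 8 bits × messages per second. The sketch below is only an illustration. Every payload size, message rate, and the 64 kbps link budget are placeholder assumptions, not measurements of any real sensor, codec, or radio, and `required_kbps` and `options_that_fit` are hypothetical helper names.

```python
def required_kbps(payload_bytes: int, messages_per_second: float) -> float:
    """Sustained link rate in kilobits per second for one sensor."""
    return payload_bytes * 8 * messages_per_second / 1000


# Placeholder (payload bytes, messages per second) pairs for three choices
# of what a node sends back. Real values depend on sensor and encoding.
OPTIONS = {
    "full frames": (200_000, 10.0),
    "face chips": (15_000, 1.0),
    "metadata only": (200, 1.0),
}


def options_that_fit(link_kbps: float, sensors: int) -> list:
    """Names of the options whose total rate across all sensors fits the link."""
    return [
        name
        for name, (size, rate) in OPTIONS.items()
        if required_kbps(size, rate) * sensors <= link_kbps
    ]


per_sensor = {name: required_kbps(size, rate) for name, (size, rate) in OPTIONS.items()}
narrow_link = options_that_fit(link_kbps=64.0, sensors=10)
print(per_sensor, narrow_link)
```

Even with made-up numbers, the orders of magnitude between sending frames, image chips, and metadata show why deciding what each node sends back drives the communications architecture.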

Marsh309 | 2 March 2021

Great job team, I like the initiative and creativity in designing this solution. I fully agree with Dr. Blocher about establishing mission parameters for your project. Having a set function (i.e., a supported mission type) can significantly reduce scope creep by solidifying your project goal.

I would recommend using concept mapping to identify the mission type (offensive, defensive, surveillance, reconnaissance, etc.) and pair it with an associated intelligence goal (HUMINT, SIGINT, IMINT, etc.). For example, if you wanted to use these for surveillance missions (which could last several days or weeks), you would need to focus on energy conservation. You would therefore most likely be conducting intermittent collection, which is where your detection sensors may be beneficial.
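The intermittent collection suggested above could be sketched as a low-power detection sensor waking the camera for a fixed hold time after each trigger. This is a minimal illustration under assumptions: the function names, the 30-second hold time, and the 5 W active and 0.5 W idle power figures are placeholders, not measured values for any hardware.

```python
def active_intervals(trigger_times, hold_s=30.0):
    """Merge trigger timestamps into [start, end] windows when the camera is on."""
    intervals = []
    for t in sorted(trigger_times):
        if intervals and t <= intervals[-1][1]:
            # Trigger arrived while already collecting: extend the window.
            intervals[-1][1] = t + hold_s
        else:
            intervals.append([t, t + hold_s])
    return intervals


def average_power_w(intervals, period_s, active_w, idle_w):
    """Mean power draw over the period for a module that idles between windows."""
    active_s = sum(end - start for start, end in intervals)
    duty = min(active_s / period_s, 1.0)
    return duty * active_w + (1.0 - duty) * idle_w


# Three motion triggers in one hour; the first two overlap into one window.
windows = active_intervals([100.0, 110.0, 500.0])
mean_w = average_power_w(windows, period_s=3600.0, active_w=5.0, idle_w=0.5)
print(windows, round(mean_w, 4))
```

With these placeholder figures, the module is active for 70 seconds of the hour, so its average draw stays close to the idle figure. That is the energy argument for letting detection sensors gate the heavier collection.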

I see a lot of potential in this idea, but don't get overwhelmed by the possibilities. Pick a specific goal, refine the project, then expand as technology/time/funding/need allows. Again, great work!